On Friday, March 2nd, I released the initial code for a new FOSS project. The code is hosted on GitHub. LogRev is a log reviser tool: it extracts statistics from log files, currently only Apache access logs. The initial design supports only a few grouping queries, but the way it is coded will allow some interesting features, which I explain in this article. I hope you enjoy the design of this tool.

The first idea is to have a dynamic parser: a configurable tokenizer and information extractor that lets you run queries against any application log. Currently it uses Parsec as a static combinator parser for one kind of log entry, the Apache access log mentioned above. Because parser combinators compose freely, the same approach will allow tokenizers to be placed dynamically, so that various kinds of entries can be parsed from a configuration. This is why the project is implemented in Haskell rather than another language, even though Parsec-like libraries exist in other languages such as C++.
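
To make the idea of dynamically placed tokenizers a bit more concrete, here is a minimal sketch. It is not LogRev's actual code: the `Token` type, `tokenizer`, `fromSpec` and `accessLog` are hypothetical names invented for this illustration, and the sample line is the usual Common Log Format example. Only the Parsec combinators themselves are real library functions.

```haskell
import Text.Parsec
import Text.Parsec.String (Parser)

-- Hypothetical token kinds that a configuration could list at run time.
data Token = Plain | Bracketed | Quoted
  deriving (Show, Eq)

-- Text between two delimiters, followed by optional spaces.
enclosed :: Char -> Char -> Parser String
enclosed open close =
  char open *> many (noneOf [close]) <* char close <* spaces

-- One small parser per token kind.
tokenizer :: Token -> Parser String
tokenizer Plain     = many1 (noneOf " ") <* spaces
tokenizer Bracketed = enclosed '[' ']'
tokenizer Quoted    = enclosed '"' '"'

-- Sequence the tokenizers in the order the specification gives them.
fromSpec :: [Token] -> Parser [String]
fromSpec spec = mapM tokenizer spec <* eof

-- Assumed layout of a Common Log Format entry:
-- host, identity, user, [time], "request", status, bytes.
accessLog :: [Token]
accessLog = [Plain, Plain, Plain, Bracketed, Quoted, Plain, Plain]

sampleLine :: String
sampleLine = "127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] \"GET /apache_pb.gif HTTP/1.0\" 200 2326"

main :: IO ()
main = print (parse (fromSpec accessLog) "sample" sampleLine)
```

Because `accessLog` is an ordinary list, a different log format only needs a different list, which is the kind of thing a configuration file could supply.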

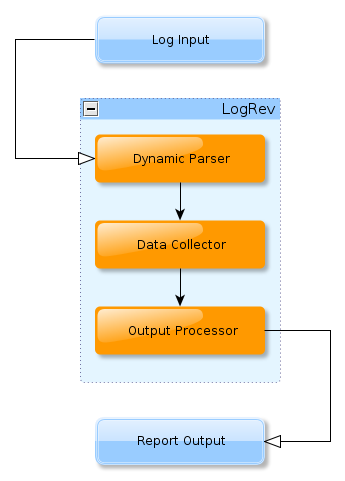

So we have a dynamic parser configured from a DSL, whose specification I am very close to finishing. It is followed by an action stack: a dynamically arranged sequence of combinators, executed in order, that extract the statistics you want. The stack can hold both predefined data collectors and pluggable, modular ones, and the collectors are also specified in the DSL. I will probably call the DSL LRS, for Log Revision Specification.
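
One possible way to picture the action stack is as a list of collectors, each folded over the parsed entries. The sketch below is only an assumption about the shape of such a design: `Entry`, `Report`, `Collector`, `groupOn`, `stack` and `runStack` are hypothetical names, and the sample entries are made up for the example.

```haskell
import qualified Data.Map.Strict as Map
import Data.List (foldl')

-- Hypothetical, already-parsed log entry; LogRev's real type may differ.
data Entry = Entry
  { status  :: Int
  , country :: String
  , size    :: Int
  }

-- Per-group totals: (number of requests, bytes sent).
type Report = Map.Map String (Int, Int)

-- A collector folds one entry into its report.
type Collector = Entry -> Report -> Report

-- Build a collector from a function that picks the grouping key.
groupOn :: (Entry -> String) -> Collector
groupOn key e = Map.insertWith add (key e) (1, size e)
  where add (c1, b1) (c2, b2) = (c1 + c2, b1 + b2)

-- The "stack": named collectors, kept in the order they were configured.
stack :: [(String, Collector)]
stack = [("Status", groupOn (show . status)), ("Country", groupOn country)]

-- Run each collector over all entries, keeping one report per collector.
runStack :: [(String, Collector)] -> [Entry] -> [(String, Report)]
runStack actions entries =
  [ (name, foldl' (flip collect) Map.empty entries) | (name, collect) <- actions ]

-- Made-up entries, only for demonstration.
sampleEntries :: [Entry]
sampleEntries = [Entry 200 "CHL" 1000, Entry 404 "USA" 200, Entry 200 "USA" 500]

main :: IO ()
main = mapM_ print (map (fmap Map.toList) (runStack stack sampleEntries))
```

A pluggable collector would then simply be another `(name, Collector)` pair appended to the list.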

Finally there is the output method, which currently supports graphic charts and plain text. In the future it will support other kinds of output, also specified in LRS.

Server logs are often huge, and we frequently need to process large amounts of data, so I decided to use Haskell as the main language. Its type system and abstractions support combinators very well, and it compiles to native code, which makes the tool fast enough to process 5000 log lines in about 1 second while generating the plain text output for two reports, the status report and the country report.

    08:00 [dmw@www:3 logrev-sample]$ wc -l main.log
    5000 main.log
    08:00 [dmw@www:3 logrev-sample]$ logrev --input=./main.log --output=report
    Processing: ./main.log
    Status:
    200: 4834 9426271 96.68 96.10
    206: 3 107131 0.06 1.09
    301: 4 733 0.08 0.01
    302: 18 7568 0.36 0.08
    403: 68 11391 1.36 0.12
    404: 73 252012 1.46 2.57
    Country:
    AUS: 5 32936 0.10 0.34
    CHL: 4516 6933671 90.32 70.69
    CHN: 90 910020 1.80 9.28
    DEU: 4 28591 0.08 0.29
    ESP: 203 174777 4.06 1.78
    RUS: 45 477554 0.90 4.87
    UKR: 4 518 0.08 0.01
    USA: 133 1217649 2.66 12.41
    real 0m1.104s
    user 0m0.964s
    sys 0m0.092s
    08:01 [dmw@www:3 logrev-sample]$ ls -l *.png
    -rw-rw-r-- 1 dmw dmw 13085 2012-03-05 08:01 report_country.png
    -rw-rw-r-- 1 dmw dmw 12031 2012-03-05 08:01 report_status.png
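
The columns of the report are not labelled. Reading them as group, request count, bytes, share of requests and share of bytes is an inference from the numbers, not something the tool states. Under that assumption, the share-of-requests column can be recomputed from the counts, as in this small sketch (`percent` and `statusCounts` are names made up for the example):

```haskell
import Text.Printf (printf)

-- Share of `part` in `total`, as a percentage.
percent :: Int -> Int -> Double
percent part total = 100 * fromIntegral part / fromIntegral total

-- Request counts per status code, copied from the sample run above.
statusCounts :: [(Int, Int)]
statusCounts = [(200, 4834), (206, 3), (301, 4), (302, 18), (403, 68), (404, 73)]

main :: IO ()
main = do
  -- The counts add up to 5000, the line count reported by wc.
  let total = sum (map snd statusCounts)
  mapM_ (\(code, n) -> printf "%d: %d %.2f\n" code n (percent n total)) statusCounts
```

For status 200 this gives 4834 / 5000 = 96.68 percent, matching the third number on that line of the report.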

The graphic chart output is also very nice, thanks to the Haskell Chart package from Hackage. Here is some sample output.

At the very bottom of the linked page is a python worker syslog server that can be hacked to do some awesome things… I am using it for a largish OSS project. I ended up rewriting a syslog server and fabric agent for my evil purposes. For live graphs and charts processing.org is always nice but there is now http://code.google.com/p/pyprocessing/ that is looking very cool.

http://wiki.loggly.com/pythonlogging